YouTube has become an essential part of the music industry. It’s a virtual venue where musicians can develop a following, release new music, stream events, communicate with fans, and even monetize their work. It’s also a popular way for consumers to discover music. But now artificial intelligence is here, and it is working hard to enshittify YouTube. The ramifications show just how unprepared the world is for AI falling into the wrong hands.

Laws aren’t being written fast enough to cover the myriad ways AI can be abused, nor would there be enough attorneys and courts to deal with the resulting lawsuits if those laws existed. Not only is the AI genie out of the bottle, the genie has acquired tactical nuclear weapons. Welcome to 2025.

How this plays out with respect to fraud, copyright and the value/ownership of intellectual property will be a major story this year. Or, given how slowly the courts move, next year. Or, maybe the year after. Well, at some point. And to be clear, I am not anti-AI as a technology. AI doesn’t kill the value of intellectual property. People abusing AI do.

Here’s an example involving YouTube monetization. If you cover a copyrighted song on YouTube, the copyright owner can file a claim against you. If you don’t contest it, then the copyright owner receives any monetization from that song. That certainly seems fair.

But now we have insane scenarios. Someone grabs videos from TV shows like The Voice, including reaction shots from the judges, in which contestants sing a copyrighted song. Next, the scammer uses AI and greenscreen to layer their own likeness over the contestant who is actually singing. The bogus singer then presents the video as footage of himself wowing the show’s judges, claims it as his own work, and collects hundreds of thousands or even millions of views, which are the key to monetization. And, hey, why not also create a bunch of additional fake channels? If one channel is shut down, the revenue generator can keep humming.

It gets worse. Splice, for example, prohibits sample providers from submitting samples that are generated from pre-existing works or that violate other agreements. So, you feel safe using its loops in your music. But then someone else creates a piece of music using the same loops, claims ownership of the song, and files a claim against you because those loops are part of their song. You can contest claims with YouTube, but it’s not necessarily an easy process. Sometimes it’s just not worth the trouble, or the risk of getting a copyright strike.

Or how about this: You create a piece of music and post it. Someone uses an AI song-generating program to create music based on yours, either by entering detailed prompts or simply by feeding in your music as audio and telling the AI to create something like it. The result sounds like your music, but the scammer says that, actually, your music sounds like theirs. So, the person who ripped you off files a claim against you. Now you have to fight for your monetization, because you’re in the position of defending yourself against a claim that you didn’t create the music you created.

This isn’t conjecture; it’s happening right now. Also note that AI-generated music can’t be copyrighted unless it has “sufficient human authorship.” Could the law be any more vague?

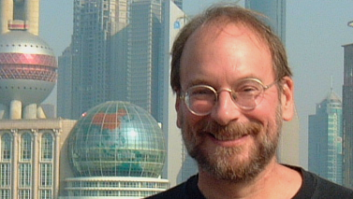

Craig Anderton’s Open Channel: Navigating the Survival of Sound

Fortunately, YouTube has just finished putting airtight guardrails in place. The company has removed virtually all fraudulent music videos from its platform, blocked those who make specious claims, and restored proper monetization to the rightful owners. Kidding!

As far as I can tell, YouTube is overwhelmed. The service is far too gargantuan to monitor, let alone control and police. Sure, when you upload a video, you must state whether AI was used in its creation, but that relies on the honor system. Unsurprisingly, people who intend to defraud or violate copyright don’t typically pay attention to honor systems.

YouTube has tried to be vigilant about infringement, saying in court documents that it has “spent over $100 million developing industry-leading tools” to prevent piracy and infringement. Some creators have sued YouTube, alleging that its tools (like Content ID) favor large companies while giving smaller creators fewer protections. In response, YouTube claims that “in the hands of the wrong party, these tools can cause serious harm.” I believe that. So, YouTube decides if and when to grant access to tools like Content ID.

I truly sympathize with YouTube, which is in an untenable position. Every day sees an average of 3.7 million uploads. That’s around 1.35 billion videos uploaded yearly. Trying to police that content is like being one security guard with a broken ankle splitting his time among 200 Wal-Marts in six different states on Black Friday. So, YouTube has no realistic choice other than to rely almost completely on algorithms, with occasional human intervention. What could possibly go wrong?

And this is only the tip of the iceberg. I could go on about people scamming Spotify, using bots to inflate stream counts, the difficulties of collecting judgments, and so on.

At this point, I’d normally offer a solution. But I don’t have any. Apparently, no one else does, either. Yes, AI is wonderful. But it can also be like giving flamethrowers to third-graders. Fasten your seat belts. There’s turbulence ahead.